The content in this article is a bit dry and difficult, but you only need to pay attention to the logic, not the math details¹.

Now that we know the distribution of the sample mean $\bar{X}$, the next question comes naturally: what is the sampling distribution of the sample variance $S^2$? Unlike the sample mean, we do not have a ready-made theorem that tells us the distribution of the sample variance, so we have to work it out ourselves. As with the sample mean, this distribution allows us to calculate the following type of probability:

- We take a random sample ($n=10$) from a population with a known variance, say, 25. We calculate the sample variance and get, say, 20. What, then, is the probability of observing a sample ($n=10$) with a sample variance of 20 or something more extreme?

Again, we will soon see why we are interested in this type of question and how the answer can tell us something about the population. The sampling distribution of the sample variance is exactly what we need to calculate this probability.

At first, it seems impossible to work out the sampling distribution of the sample variance from scratch. However, with some reasonable assumptions, it is actually manageable. Let’s start with a list of what we have in hand to clarify our task:

- We have a population with an unknown distribution: $X \sim \textmd{some distribution}$

- The population variance is known: $\mathbb{V}\textmd{ar}(X) = \sigma^2$

- We have a random sample of size $n$. Recall from Lecture 15 that a random sample of size $n$ can be treated as $n$ independent and identically distributed (i.i.d.) random variables. That is, we have $n$ independent random variables $X_1, X_2, \cdots, X_{n-1}, X_n$, each with the same distribution as $X$, the population.

- The sample variance is calculated as $S^2=\cfrac{1}{n-1}\sum_{i=1}^{n}(X_i-\bar{X})^2$, where $\bar{X}$ is the sample mean.
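To make the definition concrete, here is a minimal Python sketch (standard library only) that computes $S^2$ directly from the formula above and compares it with `statistics.variance`, which also divides by $n-1$. The sample values and the helper name `sample_variance` are made up for illustration.

```python
import statistics

def sample_variance(xs):
    """S^2 = (1 / (n - 1)) * sum((X_i - Xbar)^2), following the definition above."""
    n = len(xs)
    xbar = sum(xs) / n                      # sample mean
    return sum((x - xbar) ** 2 for x in xs) / (n - 1)

sample = [4.1, 5.3, 6.0, 3.8, 5.5]          # made-up observations, n = 5
print(sample_variance(sample))
print(statistics.variance(sample))          # standard-library sample variance, same n - 1 divisor
```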

Clearly, $S^2$ is a random variable, just like $\bar{X}$. What we want here is the distribution of the random variable $S^2$. For a start, let’s write out how the sample variance is calculated:

$$S^2 = \cfrac{1}{n-1}\left[ (X_1 - \bar{X})^2 + (X_2 - \bar{X})^2 + \cdots + (X_{n-1} - \bar{X})^2 + (X_n - \bar{X})^2 \right]$$

For the moment, we can ignore the coefficient $\frac{1}{n-1}$, which is just a constant. The random part is the sum of $n$ terms with the same structure: $(X_i - \bar{X})^2$. Since the random variables $X_1, X_2, \cdots, X_{n-1}, X_n$ are i.i.d., each term $(X_i - \bar{X})^2$ has the same distribution. Therefore, if we could figure out the distribution of one of the terms, say $(X_1 - \bar{X})^2$, we would know the distribution of every $(X_i - \bar{X})^2$. After that, we might be able to figure out the distribution of their sum, and hence the distribution of $S^2$.

The Standard Normal Squared

Now we need to figure out the distribution of one of the terms. This is difficult to do in general. However, we can stick to the principle we have talked about repeatedly: whenever we start something new, always, always start with something simple to build intuition. Therefore, let’s work out a simplified version of $(X_i - \bar{X})^2$.

From Lecture 13 and Lecture 15, we know that $\bar{X}$ follows a normal distribution (exactly when the population is normal, and approximately for large $n$ by the central limit theorem). Since a linear function of a normal random variable is still a normal random variable, $-\bar{X}$, that is, $-1 \times \bar{X}$, is also a normal random variable. Since $X_1, X_2, \cdots, X_{n-1}, X_n$ are a random sample from the population, they all have the same distribution as the population ($X$). Therefore, if the population is normally distributed, $X \sim \mathcal{N}(\mu, \sigma^2)$, then each $X_i$ is also normally distributed:

$$X_i \sim \mathcal{N}(\mu, \sigma^2) \textmd{, } i = 1,2,\cdots,n-1,n$$

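Then each $X_i - \bar{X}$, that is, $X_i + (-\bar{X})$, is also a normal random variable. Note that $X_i$ and $\bar{X}$ are not independent, so we cannot simply appeal to the sum of two independent normal random variables; instead, $X_i - \bar{X} = \left(1 - \frac{1}{n}\right)X_i - \frac{1}{n}\sum_{j \neq i}X_j$ is a linear combination of the independent normal random variables $X_1, \cdots, X_n$, and such a combination is always normal. What we want is $(X_i - \bar{X})^2$, so it seems we need the distribution of a normal random variable squared. What is the simplest normal random variable? Right … it is a standard normal random variable $\mathcal{N}(0,1)$.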

Let’s formalise our task so far. We have a standard normal random variable $Z \sim \mathcal{N}(0,1)$ and define a new random variable $U = Z^2$; we need to figure out the PDF of $U$. If you have read enough of the Extra Reading Material from previous lectures, you will know that it is difficult to work directly with the PDF. The trick here is to start with the CDF and then take its derivative to get the PDF. For $u \geqslant 0$, we begin with:

$$ \begin{aligned} \mathbb{F}_{U}(u) &= \mathbb{P}(U \leqslant u) \\ &= \mathbb{P}(Z^2 \leqslant u) \\ &= \mathbb{P}(-\sqrt{u} \leqslant Z \leqslant \sqrt{u}) \end{aligned} $$

Since $Z$ is a standard normal random variable:

$$f_Z(z) = \cfrac{1}{\sqrt{2\pi}}\,e^{-\frac{z^2}{2}}$$

Therefore, we have:

$$ \begin{aligned} \mathbb{F}_{U}(u) &= \mathbb{P}(-\sqrt{u} \leqslant Z \leqslant \sqrt{u}) \\[7.5pt] &= \int_{-\sqrt{u}}^{\sqrt{u}}f_Z(z)\mathrm{d}z \\[10pt] &= \int_{-\sqrt{u}}^{\sqrt{u}} \cfrac{1}{\sqrt{2\pi}}\,e^{-\frac{z^2}{2}} \mathrm{d}z \\[10pt] &= \cfrac{1}{\sqrt{2\pi}}\int_{-\sqrt{u}}^{\sqrt{u}}e^{-\frac{z^2}{2}} \mathrm{d}z \end{aligned} $$

Since $f_Z(z) = \cfrac{1}{\sqrt{2\pi}}\,e^{-\frac{z^2}{2}}$ is an even function, symmetric about $z=0$, the above formula becomes²:

$$\mathbb{F}_{U}(u) = \cfrac{1}{\sqrt{2\pi}} \cdot 2 \int_{0}^{\sqrt{u}}e^{-\frac{z^2}{2}} \mathrm{d}z$$

Now we use the change-of-variable technique. Let $z = \sqrt{t} = t^{\frac{1}{2}}$, so that $z^2 = t$ and $\mathrm{d}z = \frac{1}{2}t^{-\frac{1}{2}}\mathrm{d}t$. In addition, $z=0 \Rightarrow t=0$ and $z = \sqrt{u} \Rightarrow t = u$. Therefore, the above equation becomes:

$$ \begin{aligned} \mathbb{F}_{U}(u) &= \cfrac{1}{\sqrt{2\pi}} \cdot 2 \int_{0}^{\sqrt{u}}e^{-\frac{z^2}{2}} \mathrm{d}z \\[10pt] &= \cfrac{2}{\sqrt{2\pi}} \int_{0}^{u}e^{-\frac{t}{2}} \cfrac{1}{2}\,t^{-\frac{1}{2}}\mathrm{d}t \\[10pt] &= \cfrac{1}{\sqrt{2\pi}} \int_{0}^{u}t^{-\frac{1}{2}}e^{-\frac{t}{2}}\mathrm{d}t \end{aligned} $$

We are getting somewhere. Now, to find the PDF of $U$, we just need to take the derivative of $\mathbb{F}_{U}(u)$:

$$ \begin{aligned} \mathbb{F}_{U}^{\prime}(u) &= \cfrac{\mathrm{d} \left( \cfrac{1}{\sqrt{2\pi}} \int_{0}^{u}t^{-\frac{1}{2}}e^{-\frac{t}{2}}\mathrm{d}t \right) }{\mathrm{d}u} \\[10pt] &= \cfrac{1}{\sqrt{2\pi}} \cfrac{\mathrm{d} \int_{0}^{u}t^{-\frac{1}{2}}e^{-\frac{t}{2}}\mathrm{d}t }{\mathrm{d}u} \end{aligned} $$

Recall that the Fundamental Theorem of Calculus tells us that:

$$\cfrac{\mathrm{d}}{\mathrm{d}x} \int_{a}^{x}f(t)\mathrm{d}t = \mathbb{F}^{\prime}(x)=f(x)$$

So we have:

$$ \mathbb{F}_{U}^{\prime}(u) = f_{U}(u) = \cfrac{1}{\sqrt{2\pi}} \,u^{-\frac{1}{2}}e^{-\frac{u}{2}}, \textmd{ where } u > 0 $$
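As a sanity check on the derivation, the sketch below (plain Python, standard library only) uses the fact that $\mathbb{P}(-\sqrt{u} \leqslant Z \leqslant \sqrt{u}) = \operatorname{erf}(\sqrt{u/2})$ for a standard normal $Z$. It compares a numerical derivative of this CDF with the derived $f_U(u)$, and compares the CDF with the fraction of simulated $Z^2$ values below $u$. The helper names, step size and number of simulations are illustrative choices, not part of the original derivation.

```python
import math
import random

def f_U(u):
    """Derived PDF of U = Z^2, valid for u > 0."""
    return u ** -0.5 * math.exp(-u / 2) / math.sqrt(2 * math.pi)

def F_U(u):
    """CDF of U = Z^2: P(-sqrt(u) <= Z <= sqrt(u)) = erf(sqrt(u / 2)) for u >= 0."""
    return math.erf(math.sqrt(u / 2))

def numeric_derivative(F, x, h=1e-6):
    """Central-difference approximation of F'(x)."""
    return (F(x + h) - F(x - h)) / (2 * h)

# 1) The derivative of the CDF should match the derived PDF.
for u in (0.5, 1.0, 2.0, 4.0):
    print(f"u={u}: derived pdf {f_U(u):.6f}, numeric F'(u) {numeric_derivative(F_U, u):.6f}")

# 2) Monte Carlo: the share of simulated Z^2 values <= u should be close to F_U(u).
random.seed(0)
samples = [random.gauss(0, 1) ** 2 for _ in range(100_000)]
u = 1.0
empirical = sum(s <= u for s in samples) / len(samples)
print(f"simulated P(U <= {u}) = {empirical:.4f}, F_U({u}) = {F_U(u):.4f}")
```

The two values printed in each loop line should agree to several decimal places, and the simulated proportion should be close to $\mathbb{F}_U(1) \approx 0.683$.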

Sum of Standard Normal Squared

Now we have figured out the distribution of one standard normal squared ($U$), which is really nice. However, the sample variance involves the sum of many squared normal random variables, so we need to keep going and add more terms. Let’s do this incrementally, starting with the sum of two standard normals squared.

Let $Z_1$ and $Z_2$ be two independent standard normal random variables. We further define $U_1 = Z_1^2$ and $U_2 = Z_2^2$. Therefore, based on the PDF of one standard normal squared that we just derived, we have:

$$f_{U_1}(u_1) = \cfrac{1}{\sqrt{2\pi}} \,u_1^{-\frac{1}{2}}e^{-\frac{u_1}{2}} \textmd{ and } f_{U_2}(u_2) = \cfrac{1}{\sqrt{2\pi}} \,u_2^{-\frac{1}{2}}e^{-\frac{u_2}{2}}$$

Now let the new random variable $V = Z_1^2 + Z_2^2 = U_1 + U_2$. Our task is to figure out the PDF of $V$.

Again, if you have read the Extra Reading Material from Lecture 13, you will know the convolution formula:

$$f_S(s) = \int_{-\infty}^{\infty}f_X(x)f_Y(s-x)\mathrm{d}x$$

where $X$ and $Y$ are two independent random variables and $S = X + Y$. If we put $U_1$, $U_2$ and $V$ into the convolution formula, we have:

$$ \begin{aligned} f_V(v) &= \int_{-\infty}^{\infty}f_{U_1}(u_1)f_{U_2}(v-u_1)\mathrm{d}{u_1} \\[7.5pt] &= \int_{-\infty}^{\infty} \cfrac{1}{\sqrt{2\pi}} \,u_1^{-\frac{1}{2}}e^{-\frac{u_1}{2}} \cfrac{1}{\sqrt{2\pi}} \,(v-u_1)^{-\frac{1}{2}}e^{-\frac{v-u_1}{2}}\mathrm{d}{u_1}\\[10pt] &= \cfrac{1}{2\pi} \int_{-\infty}^{\infty} u_1^{-\frac{1}{2}} (v-u_1)^{-\frac{1}{2}}e^{-\frac{v}{2}}\mathrm{d}{u_1} \\[10pt] &= \cfrac{e^{-\frac{v}{2}}}{2\pi} \int_{-\infty}^{\infty} u_1^{-\frac{1}{2}} (v-u_1)^{-\frac{1}{2}} \mathrm{d}{u_1} \end{aligned} $$

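Note that $u_1 = z_1^2$ and $u_2 = z_2^2$, so both $u_1$ and $u_2$ are non-negative, and the PDFs $f_{U_1}$ and $f_{U_2}$ are zero for negative arguments. Since $v=u_1+u_2$, the integrand is non-zero only when $u_1 \geqslant 0$ and $v - u_1 \geqslant 0$, so the lower and upper bounds for $u_1$ are $0$ and $v$, respectively. Therefore, the integration from $-\infty$ to $\infty$ in the above equation becomes: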

$$ \begin{aligned} f_V(v) &= \cfrac{e^{-\frac{v}{2}}}{2\pi} \int_{-\infty}^{\infty} u_1^{-\frac{1}{2}} (v-u_1)^{-\frac{1}{2}} \mathrm{d}{u_1}\\[10pt] &= \cfrac{e^{-\frac{v}{2}}}{2\pi} \color{blue} \int_{0}^{v} \cfrac{1}{\sqrt{u_1(v-u_1)}}\, \mathrm{d}{u_1} \end{aligned} $$

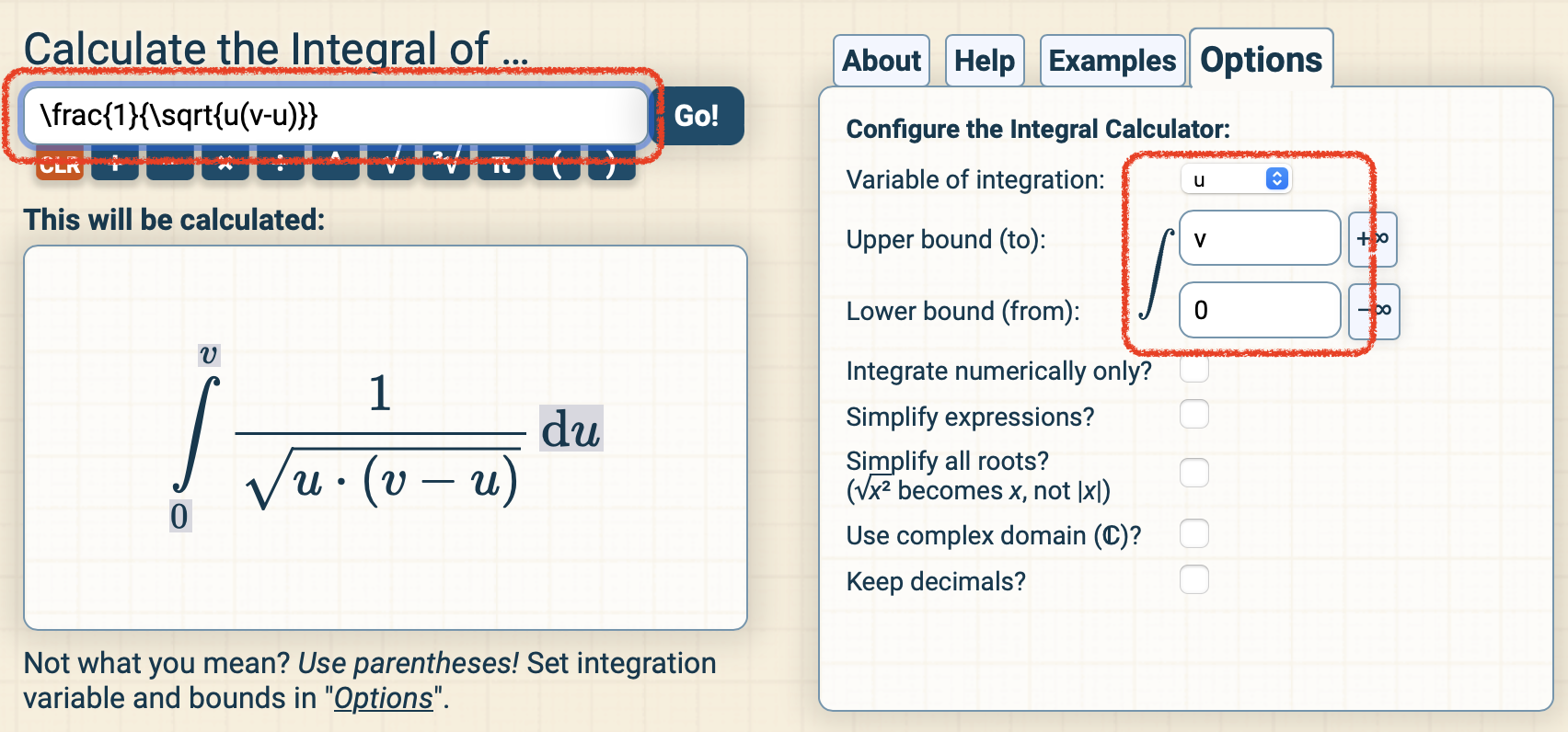

The $\color{blue} \textmd{blue part}$ is a difficult integral. However, with the help of internet, we can solve it. If you put the $\color{blue} \textmd{blue part}$ into the Integral Calculator like this (note the red rectangles):

where you put the $\LaTeX{}$ code \frac{1}{\sqrt{u(v-u)}} into the input box, and at the right-hand side, tell the calculator your variable is $u$ and you are integrating from $0$ to $v$. The calculator will tell you that the $\color{blue} \textmd{blue part}$ is equal to $\pi$3. Therefore, we have:

$$ \begin{aligned} f_V(v) &= \cfrac{e^{-\frac{v}{2}}}{2\pi} \color{blue} \int_{0}^{v} u_1^{-\frac{1}{2}} (v-u_1)^{-\frac{1}{2}} \mathrm{d}{u_1}\\[10pt] &= \cfrac{e^{-\frac{v}{2}}}{2\pi} \cdot \pi \\[10pt] &= \cfrac{1}{2}\,e^{-\frac{v}{2}}, \textmd{ where } v \geqslant 0 \end{aligned} $$
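The result $f_V(v) = \frac{1}{2}e^{-\frac{v}{2}}$ implies the CDF $\mathbb{P}(V \leqslant v) = 1 - e^{-\frac{v}{2}}$ for $v \geqslant 0$. A quick Monte Carlo sketch (plain Python; the seed, sample size and test points are chosen arbitrarily) can compare this with simulated values of $Z_1^2 + Z_2^2$:

```python
import math
import random

def F_V(v):
    """CDF implied by the derived PDF f_V(v) = exp(-v/2) / 2, for v >= 0."""
    return 1 - math.exp(-v / 2)

random.seed(1)
n_sims = 100_000
# Each simulated V is Z1^2 + Z2^2 with Z1, Z2 independent standard normals.
sims = [random.gauss(0, 1) ** 2 + random.gauss(0, 1) ** 2 for _ in range(n_sims)]

for v in (0.5, 1.0, 2.0, 5.0):
    empirical = sum(s <= v for s in sims) / n_sims
    print(f"v={v}: simulated {empirical:.4f}, derived {F_V(v):.4f}")
```

Agreement here does not prove the convolution result, but a mistake in the derivation (for example a wrong constant in front of $e^{-\frac{v}{2}}$) would show up immediately.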

Nice! We have derived the PDFs of one standard normal squared and of the sum of two independent standard normals squared. Starting from here, we can use these results to keep deriving the PDFs of sums of three, four and more independent standard normals squared. In general, when $n$ independent standard normals squared are summed together, the PDF of the sum is:

$$f_X(x) = \cfrac{1}{\Gamma \left( \frac{n}{2} \right)2^{\frac{n}{2}}}\,x^{\frac{n}{2}-1}e^{-\frac{x}{2}}, \textmd{ where } x \geqslant 0 $$

The $\Gamma$ symbol denotes the gamma function:

$$\Gamma(\alpha) = \int_{0}^{\infty}t^{\alpha-1}e^{-t}\mathrm{d}t, \textmd{ where } \alpha>0$$
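One way to check the general formula is to plug in $n=1$ and $n=2$: since $\Gamma\left(\frac{1}{2}\right)=\sqrt{\pi}$ and $\Gamma(1)=1$, it should reduce to the two PDFs derived above. The sketch below does this numerically with Python's `math.gamma`; the function names are illustrative.

```python
import math

def chi2_pdf(x, n):
    """General PDF of a sum of n independent standard normals squared, for x > 0."""
    return x ** (n / 2 - 1) * math.exp(-x / 2) / (math.gamma(n / 2) * 2 ** (n / 2))

def f_U(u):
    """PDF of one standard normal squared, derived earlier."""
    return u ** -0.5 * math.exp(-u / 2) / math.sqrt(2 * math.pi)

def f_V(v):
    """PDF of the sum of two independent standard normals squared, derived earlier."""
    return 0.5 * math.exp(-v / 2)

print(math.gamma(0.5), math.sqrt(math.pi))   # Gamma(1/2) equals sqrt(pi)
for x in (0.3, 1.0, 2.5, 7.0):
    print(x, chi2_pdf(x, 1), f_U(x), chi2_pdf(x, 2), f_V(x))
```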

This distribution is called the $\chi^2$ distribution. Its PDF was first derived by the German mathematician Friedrich Robert Helmert using induction, and it was later rediscovered by the English statistician Karl Pearson, who proposed the name $\chi^2$. The $x$-like letter $\chi$ is the Greek letter chi. You can call it the chi-square or the chi-squared distribution. It has only one parameter, the degrees of freedom⁴, which in this context is the number of independent standard normals squared in the sum.

For example, the random variable $U$ above follows a $\chi^2$ distribution with $1$ degree of freedom, because it consists of only one standard normal squared. We write this as $U \sim \chi^2(1)$. The random variable $V$ follows a $\chi^2$ distribution with $2$ degrees of freedom, because it is the sum of two independent standard normals squared. We write this as $V \sim \chi^2(2)$. In general, if $Z_1, Z_2, \cdots, Z_{n-1}, Z_n$ are independent standard normal random variables, then:

$$ \begin{aligned} U_1 \sim \chi^2(1), & \textmd{ where } U_1 = Z_1^2 \\ U_2 \sim \chi^2(2), & \textmd{ where } U_2 = Z_1^2 + Z_2^2 \\ & \vdots \\ U_{n-1} \sim \chi^2(n-1), & \textmd{ where } U_{n-1} = Z_1^2 + Z_2^2 + \cdots + Z_{n-1}^2 \\ U_n \sim \chi^2(n), & \textmd{ where } U_n = Z_1^2 + Z_2^2 + \cdots + Z_{n-1}^2 + Z_n^2 \end{aligned} $$
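For more than two terms, we can at least check the general result by simulation. The sketch below (plain Python; $k=5$, the interval $[2, 6]$, the seed and the simple midpoint-rule integral are all arbitrary illustrative choices) compares the simulated probability that $Z_1^2 + \cdots + Z_5^2$ falls in $[2, 6]$ with the same probability computed from the $\chi^2(5)$ PDF:

```python
import math
import random

def chi2_pdf(x, k):
    """Chi-squared PDF with k degrees of freedom (general formula), for x > 0."""
    return x ** (k / 2 - 1) * math.exp(-x / 2) / (math.gamma(k / 2) * 2 ** (k / 2))

def integrate_midpoint(f, a, b, steps=10_000):
    """Simple midpoint-rule integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))

random.seed(2)
k = 5
n_sims = 100_000
# Each simulated value is Z_1^2 + ... + Z_k^2 with independent standard normal Z_i.
sims = [sum(random.gauss(0, 1) ** 2 for _ in range(k)) for _ in range(n_sims)]

a, b = 2.0, 6.0
simulated = sum(a <= s <= b for s in sims) / n_sims
predicted = integrate_midpoint(lambda x: chi2_pdf(x, k), a, b)
print(f"simulated {simulated:.4f}, from chi2({k}) PDF {predicted:.4f}")
```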

The Sampling Distribution of the Sample Variance

Finally, we are ready to talk about the sampling distribution of the sample variance. Since we know the distribution of the sum of independent standard normals squared, it is relatively easy to analyse the sum of squares of independent normal random variables in general.

If the population follows a normal distribution $X \sim \mathcal{N}(\mu, \sigma^2)$, then a sample $X_1, X_2, \cdots, X_{n-1}, X_n$ consists of i.i.d. random variables, each following $\mathcal{N}(\mu, \sigma^2)$. We can easily convert each of them into a standard normal random variable:

$$\cfrac{X_i - \mu}{\sigma} \sim \mathcal{N}(0,1), \textmd{ where } i=1,2,\cdots,n-1,n$$

Therefore, according to what we just did in the previous section, each $\left( \cfrac{X_i - \mu}{\sigma} \right)^2$ follows a $\chi^2$ distribution with $1$ degree of freedom. Since these $n$ terms are independent, summing them gives:

$$\sum_{i=1}^{n} \left( \cfrac{X_i - \mu}{\sigma} \right)^2 \sim \chi^2(n)$$

However, this is not really helpful yet, because the sample variance is calculated with the sample mean $\bar{X}$, not the population mean $\mu$. A common trick is to add and subtract the term we need at the same time. Therefore, we can manipulate the left-hand side as follows:

$$ \begin{aligned} \sum_{i=1}^{n} \left( \cfrac{X_i - \mu}{\sigma} \right)^2 &= \sum_{i=1}^{n} \left( \cfrac{X_i - \bar{X} + \bar{X} - \mu}{\sigma} \right)^2 = \sum_{i=1}^{n} \left[ \cfrac{(X_i - \bar{X}) + (\bar{X} - \mu)}{\sigma} \right]^2 \\[12.5pt] &= \sum_{i=1}^{n} \left[ \cfrac{(X_i-\bar{X})^2 + (\bar{X} - \mu)^2 + 2(X_i-\bar{X})(\bar{X} - \mu)}{\sigma^2} \right] \\[12.5pt] &= \sum_{i=1}^{n} \left( \cfrac{X_i - \bar{X}}{\sigma} \right)^2 + \sum_{i=1}^{n} \left( \cfrac{\bar{X} - \mu}{\sigma} \right)^2 + \cfrac{2(\bar{X}-\mu)}{\sigma^2}\sum_{i=1}^{n}(X_i-\bar{X}) \end{aligned} $$

Note that $\sum_{i=1}^{n}(X_i-\bar{X}) = \sum_{i=1}^{n}X_i - \sum_{i=1}^{n}\bar{X} = n\bar{X} - n\bar{X}=0$, so the last term of the above equation is $0$. Then we have:

$$ \begin{aligned} \sum_{i=1}^{n} \left( \cfrac{X_i - \mu}{\sigma} \right)^2 &= \sum_{i=1}^{n} \left( \cfrac{X_i - \bar{X}}{\sigma} \right)^2 + \sum_{i=1}^{n} \left( \cfrac{\bar{X} - \mu}{\sigma} \right)^2 \\[12.5pt] &= \sum_{i=1}^{n} \left( \cfrac{X_i - \bar{X}}{\sigma} \right)^2 + n \left( \cfrac{\bar{X} - \mu}{\sigma} \right)^2 \\[12.5pt] &= \color{blue} \sum_{i=1}^{n} \left( \cfrac{X_i - \bar{X}}{\sigma} \right)^2 + \color{red} \left( \cfrac{\bar{X} - \mu}{\sigma/\sqrt{n}} \right)^2 \end{aligned} $$

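Remember that the left-hand side of the above equation follows $\chi^2(n)$, as it is the sum of $n$ independent standard normals squared. Also, since the population is normal, $\bar{X} \sim \mathcal{N}(\mu_{\bar{X}}=\mu, \sigma^2_{\bar{X}}=\sigma^2/n)$ exactly (for a non-normal population, the central limit theorem gives this only approximately). Then we have $\cfrac{\bar{X}-\mu}{\sigma/\sqrt{n}} \sim \mathcal{N}(0,1)$. Therefore, by definition, the $\color{red} \textmd{red term}$ follows $\chi^2(1)$. For a normal sample, it can also be shown that $\bar{X}$ is independent of the deviations $X_i - \bar{X}$ (we state this standard result without proof; it is often proved via Cochran's theorem), so the $\color{blue} \textmd{blue term}$ is independent of the $\color{red} \textmd{red term}$. A $\chi^2(n)$ variable that splits into an independent $\chi^2(1)$ part leaves a $\chi^2(n-1)$ part, and hence the $\color{blue} \textmd{blue term}$ follows $\chi^2(n-1)$.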
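The decomposition into the blue and red terms is an exact algebraic identity for any sample, so it can be checked numerically. The sketch below (plain Python; $\mu$, $\sigma$, $n$ and the seed are arbitrary illustrative values) draws one normal sample and confirms that the cross term vanishes and that the left-hand side equals the blue term plus the red term up to rounding error:

```python
import math
import random

random.seed(4)
mu, sigma, n = 3.0, 2.0, 8                        # illustrative values, chosen arbitrarily
xs = [random.gauss(mu, sigma) for _ in range(n)]
xbar = sum(xs) / n                                # sample mean

lhs = sum(((x - mu) / sigma) ** 2 for x in xs)    # sum of ((X_i - mu) / sigma)^2
blue = sum(((x - xbar) / sigma) ** 2 for x in xs) # blue term
red = ((xbar - mu) / (sigma / math.sqrt(n))) ** 2 # red term
cross = 2 * (xbar - mu) / sigma ** 2 * sum(x - xbar for x in xs)  # cross term, zero up to rounding

print(lhs, blue + red, cross)
```

The identity holds for any numbers; the distributional statements ($\chi^2(n)$, $\chi^2(1)$, $\chi^2(n-1)$) are what require the normality assumption.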

Now we manipulate the $\color{blue} \textmd{blue term}$ to reveal the sample variance, which is straightforward:

$$ \begin{aligned} \sum_{i=1}^{n} \left( \cfrac{X_i - \bar{X}}{\sigma} \right)^2 &= \cfrac{1}{\sigma^2} \sum_{i=1}^{n} (X_i - \bar{X})^2 = \cfrac{n-1}{\sigma^2} \cdot \cfrac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2 \\[12.5pt] &= \cfrac{(n-1)S^2}{\sigma^2} \end{aligned} $$

Therefore, we finally arrive at:

$$ \cfrac{(n-1)S^2}{\sigma^2} \sim \chi^2(n-1) $$

You see, we do not actually get the distribution of $S^2$ itself. What we get is the distribution of $\cfrac{(n-1)S^2}{\sigma^2}$. It follows $\chi^2(n-1)$, which has a known PDF, so we can calculate related probabilities.
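Putting the result to work on the opening example ($n=10$, $\sigma^2=25$, observed $S^2=20$): the statistic is $\frac{(n-1)S^2}{\sigma^2} = \frac{9 \times 20}{25} = 7.2$. The sketch below assumes a normal population and reads “20 or more extreme” as the lower tail $\mathbb{P}(S^2 \leqslant 20)$; this direction is our assumption, since the example does not fix it. It computes that probability from the $\chi^2(9)$ PDF with a hypothetical midpoint-rule helper `chi2_cdf` (in practice a library routine such as SciPy's `scipy.stats.chi2.cdf` would be the usual choice), then cross-checks it by simulating many normal samples of size $10$.

```python
import math
import random

def chi2_pdf(x, k):
    """Chi-squared PDF with k degrees of freedom, for x > 0."""
    return x ** (k / 2 - 1) * math.exp(-x / 2) / (math.gamma(k / 2) * 2 ** (k / 2))

def chi2_cdf(x, k, steps=20_000):
    """Hypothetical helper: P(chi2(k) <= x) via a midpoint-rule integral (fine for k >= 2)."""
    h = x / steps
    return h * sum(chi2_pdf((i + 0.5) * h, k) for i in range(steps))

def sample_variance(xs):
    """S^2 = sum((x - xbar)^2) / (n - 1)."""
    xbar = sum(xs) / len(xs)
    return sum((x - xbar) ** 2 for x in xs) / (len(xs) - 1)

# The opening example: n = 10, population variance 25, observed S^2 = 20.
n, sigma2, s2_observed = 10, 25.0, 20.0
statistic = (n - 1) * s2_observed / sigma2   # (n - 1) S^2 / sigma^2 = 7.2
p_at_most = chi2_cdf(statistic, n - 1)       # P(S^2 <= 20) under a normal population
print(f"statistic = {statistic}, P(S^2 <= 20) = {p_at_most:.4f}")

# Monte Carlo cross-check with normal samples of size n (the mean 0 is arbitrary).
random.seed(3)
sims = [(n - 1) * sample_variance([random.gauss(0.0, math.sqrt(sigma2)) for _ in range(n)]) / sigma2
        for _ in range(50_000)]
simulated = sum(t <= statistic for t in sims) / len(sims)
print(f"simulated P(S^2 <= 20) = {simulated:.4f}")
```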

In future lectures, we will see how we can use this distribution for probability calculations and statistical inference.

What If The Population Is Not Normal

So far, we have only solved the simpler case by assuming that $X_1,X_2,\cdots,X_{n-1},X_n$ are all normally distributed, that is, that they are a sample from a normal population $X \sim \mathcal{N}(\mu, \sigma^2)$. What if the population is not normal?

Well, that case is more difficult to work out⁵, so we are not going to discuss it in this introductory course.

-

The content in this lecture is not required for the exams. It is good to know the derivation of the PDF of Chi-squared distributions. However, what is more important is the intuition about Chi-squared distributions and where they come from. ↩︎

-

Strictly speaking, what we should do here is $\int_{-\sqrt{u}}^{\sqrt{u}}e^{-\frac{z^2}{2}} \mathrm{d}z = \int_{-\sqrt{u}}^{0}e^{-\frac{z^2}{2}} \mathrm{d}z + \int_{0}^{\sqrt{u}}e^{-\frac{z^2}{2}} \mathrm{d}z$, and then note that the two integrals on the right-hand side are equal because the integrand is even. ↩︎

-

Amazing, isn’t it? I did not know how to do the integration here. However, I have friends who do :-) Thanks to Dr. Le Chen for helping solve the integral. The integration we need to do is $\int_{0}^{v}\frac{1}{\sqrt{u_1(v-u_1)}}\,\mathrm{d}u_1$. Now let $u_1=v\sin^2\theta$. Then $u_1=0 \Rightarrow \theta=0$ and $u_1=v \Rightarrow \theta=\frac{\pi}{2}$, and also $\mathrm{d}u_1=v\,\mathrm{d}\sin^2\theta=2v\sin\theta\,\mathrm{d}\sin\theta = 2v\sin\theta\cos\theta\,\mathrm{d}\theta$. Now we rewrite the expression inside the square root: $u_1(v-u_1) = v\sin^2\theta [v - v\sin^2\theta] = v\sin^2\theta[v(1-\sin^2\theta)] = v^2\sin^2\theta\cos^2\theta$, so $\sqrt{u_1(v-u_1)} = v\sin\theta\cos\theta$ for $\theta \in \left[0, \frac{\pi}{2}\right]$. Then the integral becomes $\int_{0}^{\frac{\pi}{2}}\cfrac{1}{v\sin\theta\cos\theta}\,2v\sin\theta\cos\theta\,\mathrm{d}\theta = 2 \cdot \int_{0}^{\frac{\pi}{2}}\mathrm{d}\theta = 2 \cdot \frac{\pi}{2} = \pi$. ↩︎

-

Do not worry about the degrees of freedom for now. It has a deep meaning in statistics which I do not fully understand. We will explain it in the next lecture and see it repeatedly throughout the rest of the course. ↩︎

-

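Actually, it is not that bad as long as the population is close enough to “normal”. Note, however, that symmetry alone is not sufficient: the $\chi^2$ result for the sample variance is sensitive to how heavy the population’s tails are. Therefore, on many occasions, it is still okay to use the $\chi^2$ distribution for the sample variance, but with more caution than for the sample mean. ↩︎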